We have a saying in InfoSec: we’re always preparing for when, not if, we will be targeted. So we have to focus on getting the tools and visibility necessary to quickly detect and respond to security incidents, especially those that put our intellectual property at risk.

When it comes to protecting intellectual property and personally identifiable information, a Data Loss Prevention (DLP) program is an important cornerstone.

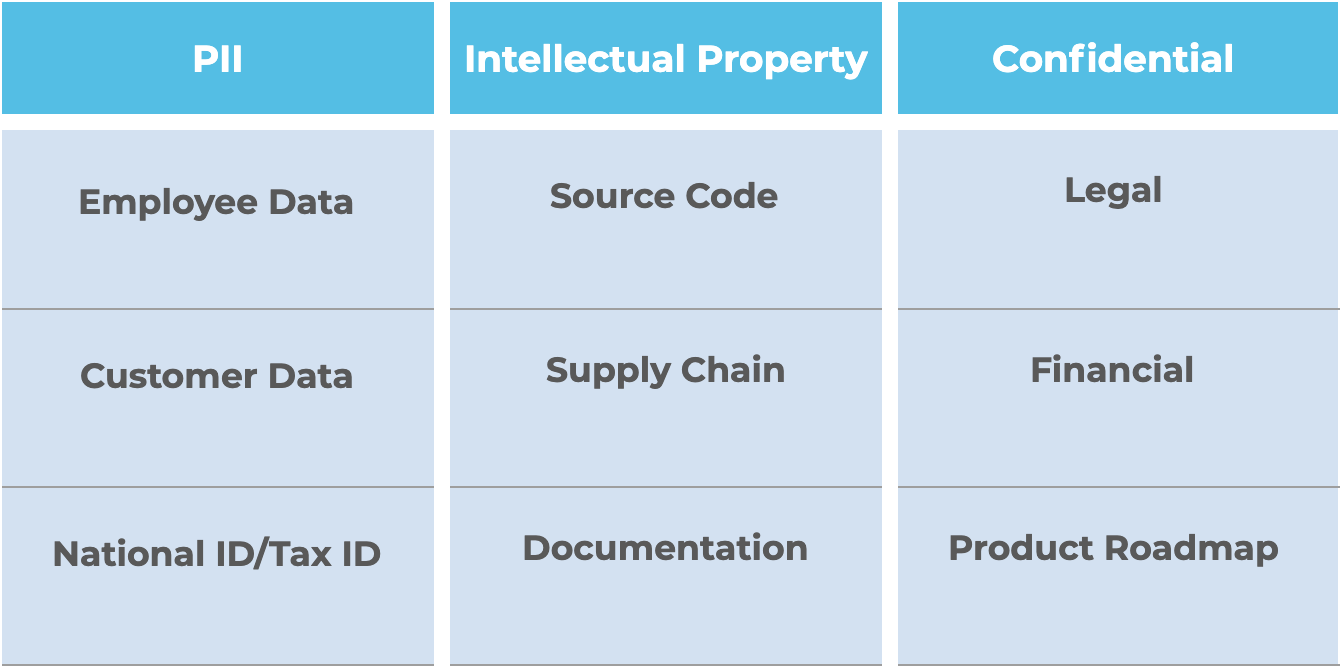

At Palo Alto Networks, we provide security products and services for over 85,000 customers around the world. Some of our most sacred assets are our own data, including the data we hold about our employees and customers, as well as the source code and documentation for our products and services. We monitor these key areas as part of our DLP program.

For DLP monitoring, we consider three main risks:

- Accidental Exposure: While most employees have the best intentions, mistakes can happen. User education will help prevent accidental exposure, but we need to be able to quickly detect and respond when our most critical data is in play. We also need to configure our applications to take advantage of privacy and role-based access controls.

- External Attack: As with most other types of security incidents, we’re always on the lookout for externally sourced attempts to access and exfiltrate our data.

- Insider Threat: Perhaps one of the most critical risks for organizations is bad behavior on the part of trusted resources: your own employees. We need a smart approach to monitoring and analysis in order to detect threats that are already inside our perimeter.

The foundations of our DLP program include:

- IT and InfoSec policy to outline data classification

- The required care and handling for that data

- User education

- Detection and remediation controls

Policy is very important because we need to be able to point to the rules when enforcing them. Policy controls help ensure that applications and workflows meet minimum standards for privacy and security, and they provide boundaries for users as they go about their work. However, the old saying that rules are meant to be broken applies here, with a twist: rules will be broken.

Avoid the Frankenstein Approach

There are a number of challenges we need to account for when introducing DLP controls. First, an increasing amount of data is encrypted, which makes it difficult to inspect. Second, automated DLP detection is not perfect; there is always potential for misclassification. Together, these two factors mean a human threat can circumvent some DLP controls relatively easily.

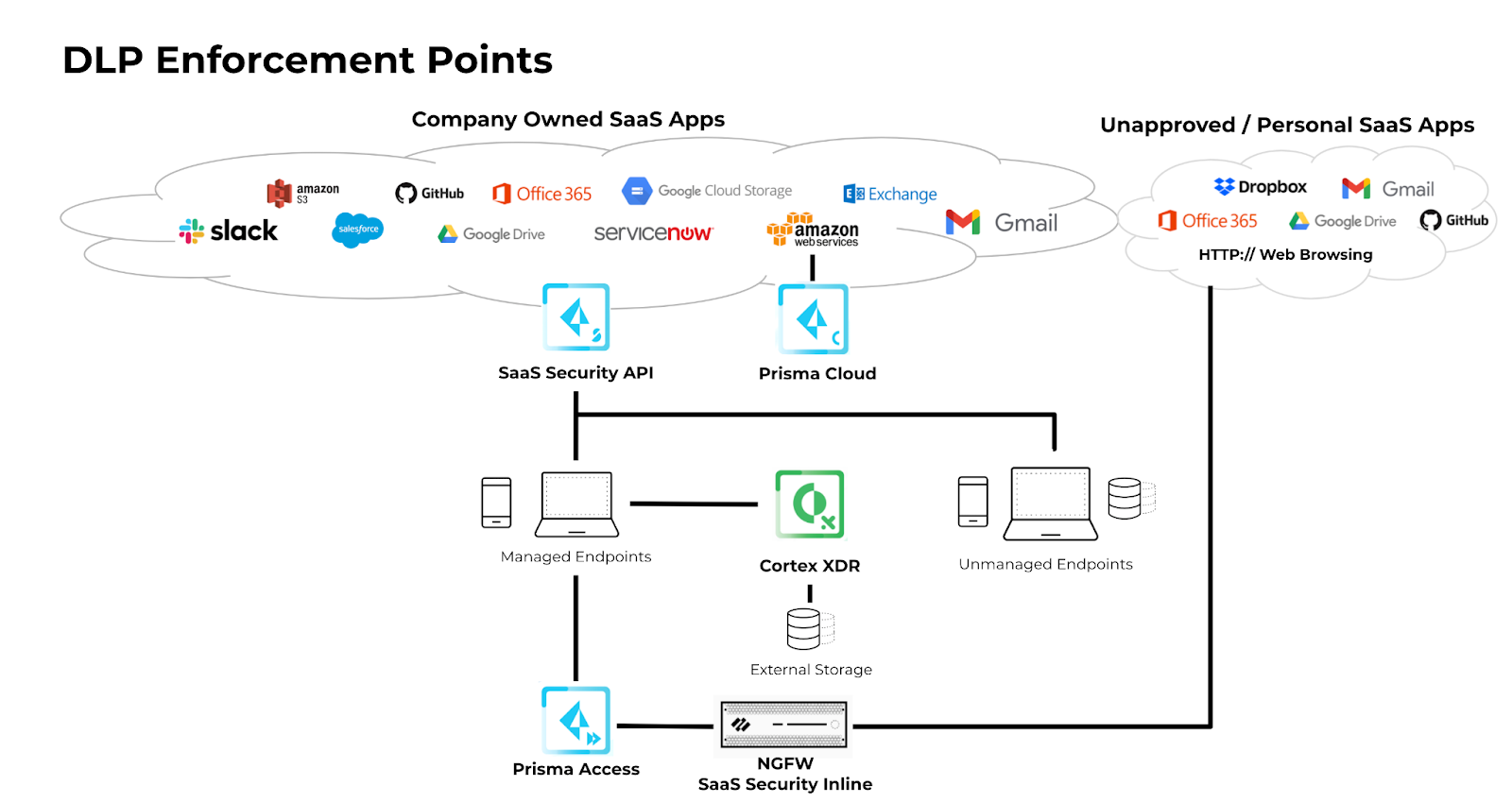

What lets us monitor effectively, and what sets us apart from our competitors, is Palo Alto Networks’ platform-centric approach to security. Our data is everywhere: it’s on user endpoints, it’s in motion inside our networks, and it’s in the cloud. Enabling detection and response controls with the “Frankenstein approach,” where different detection tools monitor different things, means extra work for the security team and more room for error.

Our Enterprise DLP solution centralizes the detection component and helps us take action by answering the Three Whats:

- What is the data?

- What is the risk?

- What action should be taken?

This simplifies our analysis because we can rely on all of our sensors and enforcement points to submit data to a single detection engine: the Enterprise DLP Service. It also moves detection policy configuration out of the individual sensors and into one central location, from which detections apply across the enterprise.

The Enterprise DLP Service uses two techniques to detect sensitive data: regular expressions and machine learning. Regular expressions let me, as an end user, configure custom detections relevant to my own local use cases, while machine learning can more accurately detect broad categories of data that almost any organization will want to monitor, such as financial or legal documents. Activity Logging also augments our detection by analyzing patterns of behavior rather than looking for the sensitive data itself.
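To make the regular-expression side concrete, here is a minimal sketch of how custom regex rules might be evaluated against text that any sensor submits to one central engine. The rule names, patterns, `min_matches` threshold and the `detect` function are hypothetical illustrations, not the Enterprise DLP Service's actual configuration format or API, and the machine learning classifiers and Activity Logging are not modeled.

```python
"""Minimal sketch of regex-based custom DLP detections.

Assumptions (hypothetical, not the Enterprise DLP Service API):
- Each rule is a name plus a compiled regular expression.
- A single detection function scans text submitted by any sensor and
  reports which rules fired, with match counts.
"""
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class RegexRule:
    name: str
    pattern: re.Pattern
    min_matches: int = 1  # hypothetical threshold to cut down on noise


# Hypothetical custom rules for local use cases.
RULES = [
    RegexRule("us_ssn", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    RegexRule("internal_codename", re.compile(r"\bPROJECT-[A-Z]+\b")),
    # AWS access key IDs are 20 characters starting with "AKIA".
    RegexRule("aws_access_key_id", re.compile(r"\bAKIA[0-9A-Z]{16}\b")),
]


def detect(text: str, rules: list[RegexRule] = RULES) -> dict[str, int]:
    """Return {rule_name: match_count} for every rule that fires."""
    hits: dict[str, int] = {}
    for rule in rules:
        count = len(rule.pattern.findall(text))
        if count >= rule.min_matches:
            hits[rule.name] = count
    return hits


sample = "Employee SSN 123-45-6789 mentioned in PROJECT-ATLAS notes."
print(detect(sample))  # {'us_ssn': 1, 'internal_codename': 1}
```

Keeping every rule in one list mirrors the idea of a centralized detection policy: sensors only forward content, and adding a rule in one place changes detection everywhere.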

Automation vs Accuracy: It’s a Balancing Act

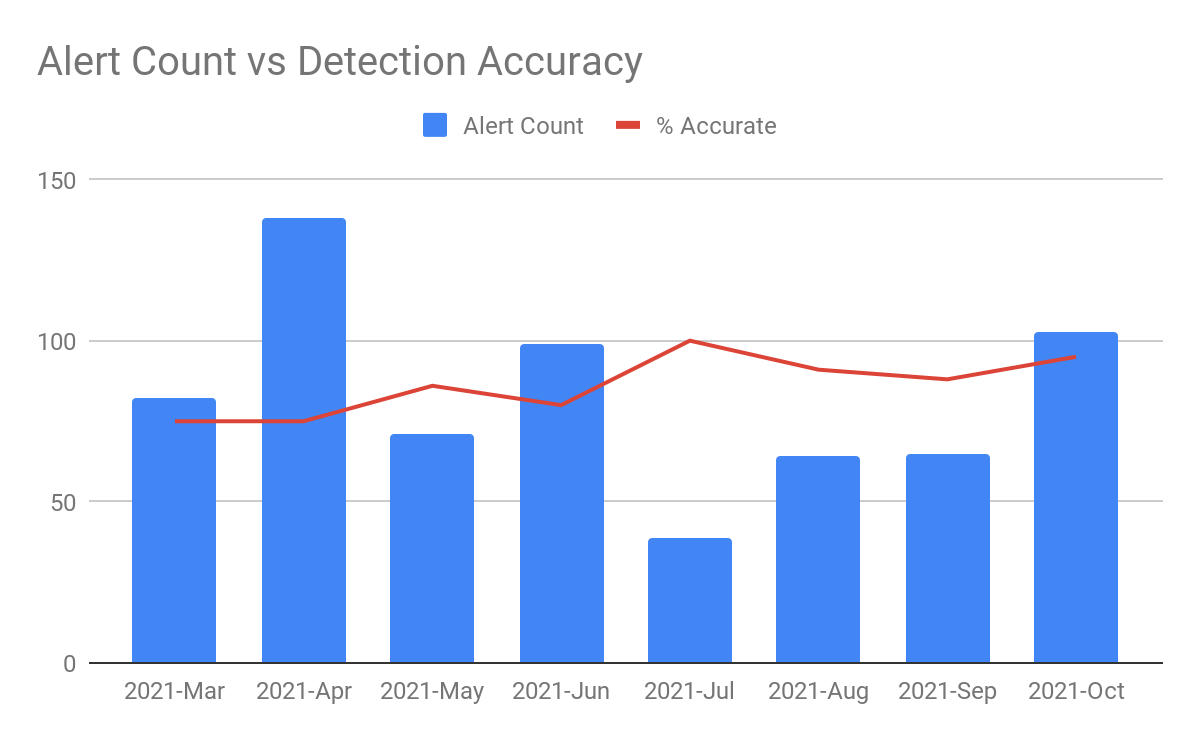

Using these detections, we trigger security alerts that the Security Operations Center (SOC) then investigates and responds to using Cortex XSOAR automation. An automated email is sent to the violating user and their manager to confirm whether the data movement was expected activity. We measure success in this area by balancing the quantity of alerts against the accuracy of detection. We don’t want to send out alerts for inaccurate detections, so we take great care in customizing detections to keep accuracy as close to 100% as possible.

We generally see accuracy dip as alert volume increases, so we run a review cycle to get the alerts back on track, then wait for accuracy to climb again before introducing new alerts.
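The review cycle above can be expressed as a simple gate. This sketch assumes, hypothetically, that analysts label each closed alert as a true or false positive, that "accuracy" means confirmed true positives divided by total alerts (i.e., precision), and that new detections are introduced only after a couple of consecutive cycles meet a target. The `ReviewCycle` type, the 95% target and the two-cycle window are illustrative choices, not the SOC's actual thresholds.

```python
"""Sketch of gating new DLP alerts on recovered accuracy.

Assumptions (hypothetical): analysts label each closed alert, accuracy is
true positives / total alerts, and new detections wait until the most
recent `window` review cycles all meet `target`.
"""
from dataclasses import dataclass


@dataclass
class ReviewCycle:
    alerts: int          # alerts closed during the cycle
    true_positives: int  # alerts confirmed as accurate detections

    @property
    def accuracy(self) -> float:
        # No alerts means no false positives, so treat it as fully accurate.
        return self.true_positives / self.alerts if self.alerts else 1.0


def ready_for_new_alerts(cycles: list[ReviewCycle], target: float = 0.95,
                         window: int = 2) -> bool:
    """True when the last `window` cycles all meet the accuracy target."""
    recent = cycles[-window:]
    return len(recent) == window and all(c.accuracy >= target for c in recent)


# Illustrative numbers only: volume rises, accuracy dips, tuning restores it.
history = [
    ReviewCycle(alerts=200, true_positives=196),  # 98%
    ReviewCycle(alerts=450, true_positives=387),  # 86%
    ReviewCycle(alerts=300, true_positives=291),  # 97%
    ReviewCycle(alerts=280, true_positives=274),  # about 97.9%
]
print(ready_for_new_alerts(history))  # True
```

Requiring more than one good cycle avoids adding alerts on the strength of a single lucky week.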

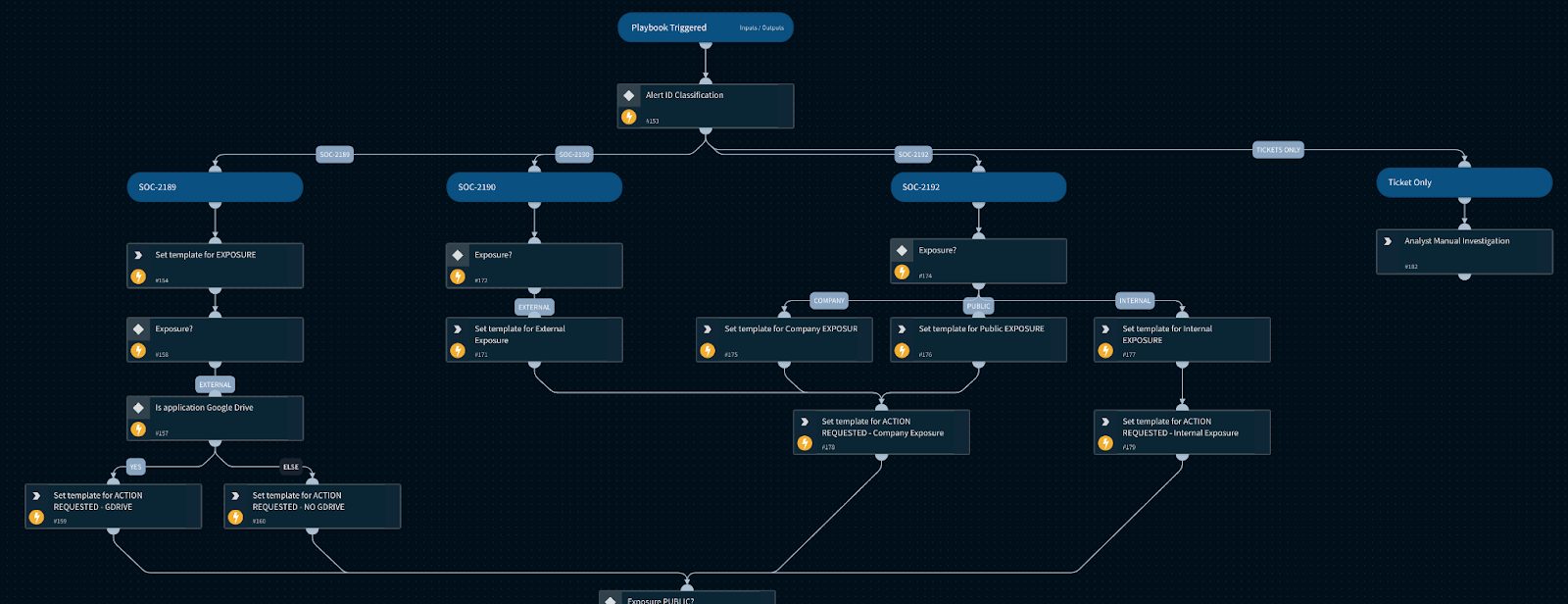

We put a lot of emphasis on building automation in Cortex XSOAR for our SOC because it improves both the speed and the consistency of our response actions. Automation is also essential in today’s SOC because the number of alerts the team has to handle continues to grow rapidly; it’s impossible for a SOC team to function without some level of automated response.

We ingest DLP alerts into Cortex XSOAR and then automate responses depending on various factors, including the severity of the violation, the type of data involved and how the data is exposed. The types of responses we automate include:

- Notifying the offending user and their manager

- Notifying the SOC to perform further investigation

- Notifying response partners like Legal or HR
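The decision logic behind those responses can be sketched as a small routing function. Everything here is a hypothetical illustration: the severity levels, data types, exposure values and specific rules are invented for the example and are neither Cortex XSOAR playbook syntax nor the rules Palo Alto Networks actually uses.

```python
"""Sketch of routing a DLP alert to automated responses.

Assumptions (hypothetical): the severity levels, data types, exposure
values and routing rules are illustrative, not real playbook logic.
"""
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NOTIFY_USER_AND_MANAGER = "notify_user_and_manager"
    ESCALATE_TO_SOC = "escalate_to_soc"
    NOTIFY_LEGAL_HR = "notify_legal_hr"


@dataclass
class DlpAlert:
    user: str
    severity: str   # "low" | "medium" | "high"
    data_type: str  # e.g. "source_code", "pii", "financial"
    exposure: str   # e.g. "internal_share", "public_link", "personal_email"


def route(alert: DlpAlert) -> list[Action]:
    # Every violation starts by confirming intent with the user and manager.
    actions = [Action.NOTIFY_USER_AND_MANAGER]
    # High severity or publicly exposed data gets an analyst's attention.
    if alert.severity == "high" or alert.exposure == "public_link":
        actions.append(Action.ESCALATE_TO_SOC)
    # PII leaving through a personal channel also pulls in Legal/HR.
    if alert.data_type == "pii" and alert.exposure == "personal_email":
        actions.append(Action.NOTIFY_LEGAL_HR)
    return actions


alert = DlpAlert("jdoe", "medium", "source_code", "public_link")
print([a.value for a in route(alert)])
# ['notify_user_and_manager', 'escalate_to_soc']
```

Returning a list of actions, rather than a single outcome, lets one alert trigger several responses at once, which matches the way the notifications above can overlap.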

The Cortex XSOAR playbook below takes the alert data and builds an automated email to send to the violating user and their manager.
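Outside of Cortex XSOAR, the email-building step might look roughly like this sketch, which uses Python's standard-library `email.message.EmailMessage`. The alert field names, the sender address and the message wording are hypothetical. The sketch only builds the message and does not send it; the real workflow is a Cortex XSOAR playbook, not standalone Python.

```python
"""Sketch of turning DLP alert data into a user/manager notification.

Assumptions (hypothetical): the alert is a dict with the field names in
REQUIRED_FIELDS, and the sender address and wording are illustrative.
"""
from email.message import EmailMessage

REQUIRED_FIELDS = ("user_name", "user_email", "manager_email",
                   "data_type", "file_name", "channel")


def build_notification(alert: dict,
                       sender: str = "dlp-notify@example.com") -> EmailMessage:
    missing = [f for f in REQUIRED_FIELDS if f not in alert]
    if missing:
        raise ValueError(f"alert is missing fields: {missing}")

    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = alert["user_email"]
    msg["Cc"] = alert["manager_email"]  # the manager is copied on every notice
    msg["Subject"] = f"Action required: confirm {alert['data_type']} data movement"
    msg.set_content(
        f"Hello {alert['user_name']},\n\n"
        f"Our DLP monitoring detected {alert['data_type']} data in "
        f"'{alert['file_name']}' moving via {alert['channel']}.\n\n"
        "Please reply to confirm whether this was expected business activity.\n\n"
        "Thank you,\nInformation Security\n"
    )
    return msg


alert = {"user_name": "Jane", "user_email": "jane@example.com",
         "manager_email": "manager@example.com", "data_type": "source code",
         "file_name": "build.py", "channel": "a public file-share link"}
print(build_notification(alert)["Subject"])
# Action required: confirm source code data movement
```

Validating required fields up front means a malformed alert fails loudly instead of sending a half-filled email to a user and their manager.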

What’s Next: Remediation Enhancements

Currently, all auto-remediation is performed as part of policy in SaaS Security API, which can take actions such as quarantining files or folders in SaaS applications or removing shared access to them. This will change when the SaaS Security Cortex XSOAR integration launches (coming soon!), which will let us kick off these remediation actions as part of a Cortex XSOAR playbook and vary the response action depending on the scenario.

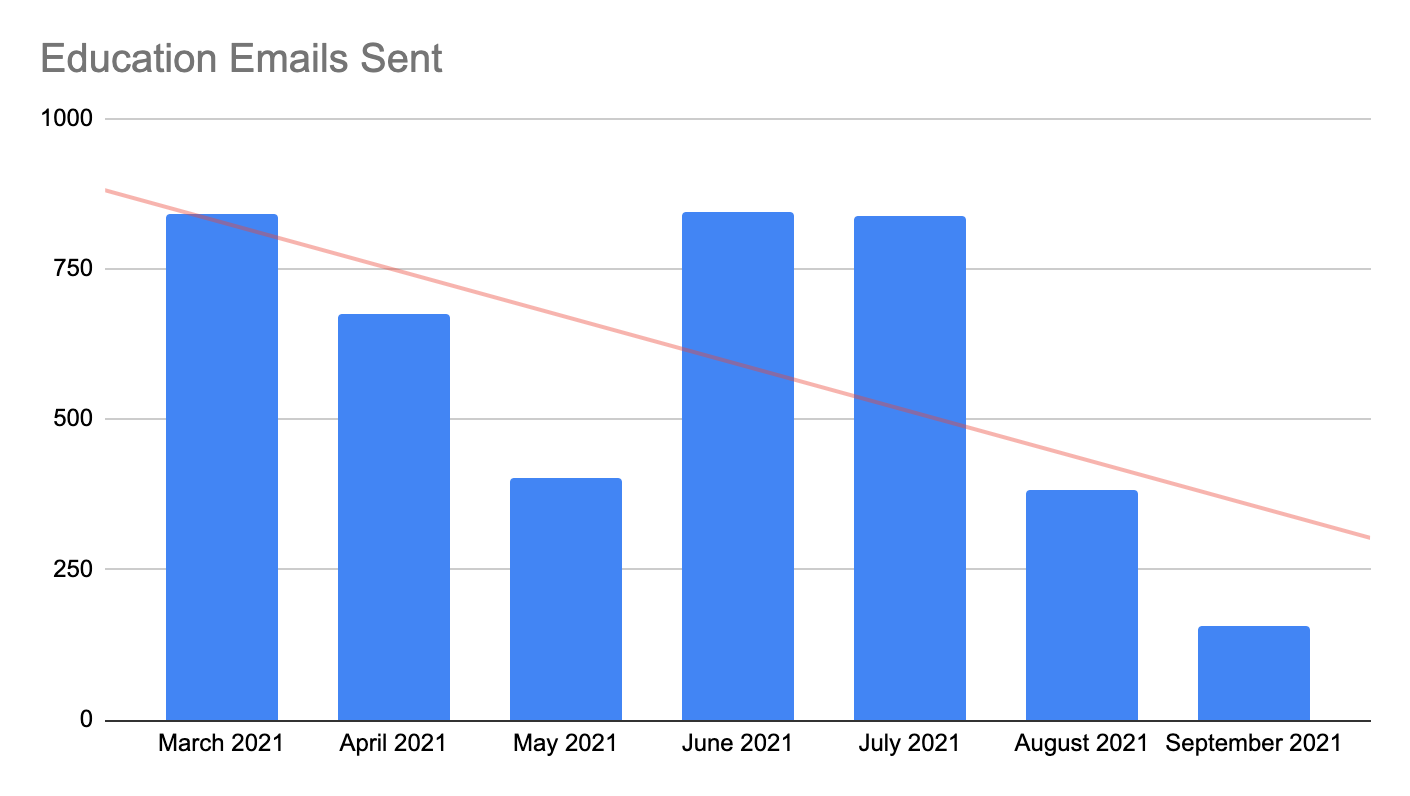

We are also leveraging the SaaS Security API Remediation Digest email to notify users of file-sharing activity that could be done more securely, without creating a ticket for the SOC to investigate. We use this email notice instead of sending an alert to Cortex XSOAR in scenarios where we don’t expect a response from the user; it’s purely educational, helping users work more securely in their day-to-day activities. These notifications have produced a significant change in user behavior and helped improve our security posture.

Data Loss Prevention can take on many forms depending on the needs of the organization, but like many of the information security challenges we face today there are concrete strategies we can employ to help make it simpler. Beginning with policy allows us to outline the basic requirements and then implement detective and preventive controls around them. Lastly, automation is a key component for implementing a successful and scalable DLP program. As security teams face a rapidly evolving threat landscape, investing in a strong data protection strategy today will help make our mission much more achievable in the future.